WARNING:

This report contains descriptions of violence against women.

Twitter has a responsibility to take concrete steps and actions to address any human rights abuses occurring on its platform.

Part of this requires Twitter to have policies that are compliant with international human rights standards and to ensure that it has a robust reporting process that enables users to easily report any instances of violence and abuse. Yet, despite having policies that explicitly state that hateful conduct and abuse will not be tolerated on the platform, Twitter appears to be inadequately enforcing these policies when women report violence and abuse.

At times, it fails even to tell women who have taken the time to report abusive content what action, if any, has been taken.

Twitter’s inconsistency and inaction on its own rules not only creates a level of mistrust and lack of confidence in the company’s reporting process, it also sends the message that Twitter does not take violence and abuse against women seriously – a failure which is likely to deter women from reporting in the future.

I gave up on reporting to Twitter a long time ago.

Zoe Quinn, Games Developer

How did Twitter respond when you reported the abuse?

Twitter’s Due Diligence Responsibilities

According to the United Nations Guiding Principles on Business and Human Rights, Twitter must identify, prevent, address and account for human rights abuses in its operations. In doing so, it must express and embed its commitment to human rights both through its policies and practices. The company must take a number of steps to meet its human rights responsibilities – the first being the development of policy commitments that incorporate and recognize international human rights standards.

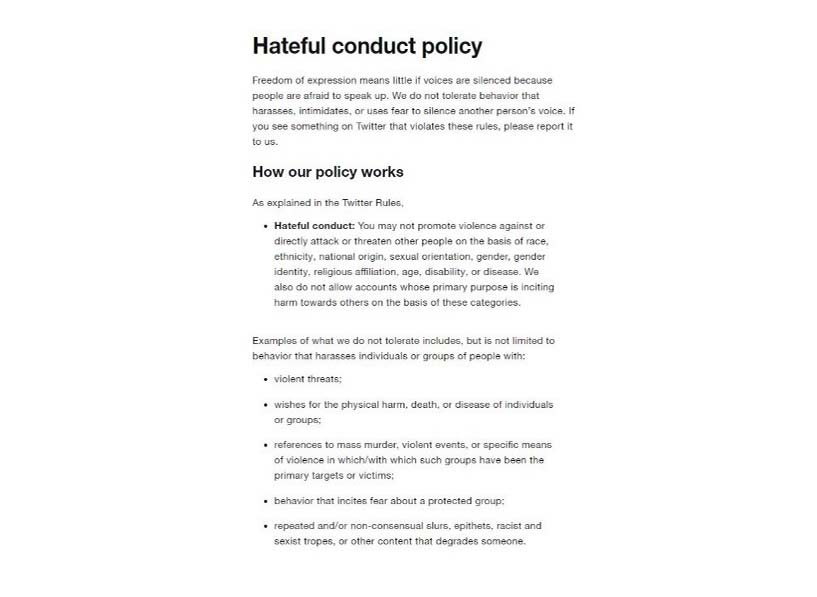

Twitter Policies

Twitter currently has no publicly available human rights policy stating its commitment to respect human rights in its operations. The UN Guiding Principles state that such a policy must also identify who is in charge of overseeing, implementing and monitoring Twitter’s human rights commitments. Twitter does have a number of policies related to addressing violence and abuse on the platform. In December 2015, Twitter introduced its policy on ‘Hateful Conduct and Abuse’. More recently, following the suspension of actor Rose McGowan’s account in November 2017 and #WomenBoycottTwitter going viral, current CEO Jack Dorsey promised a “more aggressive stance” on tackling abuse. He expedited the expansion and enforcement of Twitter rules to include unwanted sexual advances, intimate media, hateful imagery and display names, and violence.

The Hateful Conduct and Abuse policy explicitly states that users ‘may not promote violence against or directly attack or threaten other people on the basis of race, ethnicity, national origin, sexual orientation, gender, gender identity, religious affiliation, age, disability, or disease.’

The policy also provides an overview of the types of behaviours that are not allowed on the platform and encourages users to report content on the platform that they believe is in breach of Twitter’s community standards. However, Twitter does not state who is responsible for the oversight and implementation of the policy.

When a reported tweet or account is found to be in violation of the Twitter Rules, the company states it will either: require the user to delete prohibited content before posting new content or interacting with other Twitter users; temporarily limit the user’s ability to create posts or interact with other Twitter users; ask the user to verify account ownership with a phone number or email address; or permanently suspend account(s). Twitter has a ‘philosophy’ on how it enforces its own rules but it does not provide concrete examples or guidance on this. It therefore remains unclear how the category or severity of violence or abuse reported to Twitter is assessed by company moderators to determine which of the aforementioned resolutions are applicable. Twitter does have a specific policy on abusive profiles that states accounts with ‘abusive profile information usually indicate abusive intent and strongly correlate with abusive behavior’ and describes how the platform reviews and enforces action against account profiles that violate the Twitter rules.

Twitter is taking some proactive steps to address violence and abuse on the platform. In a letter to Amnesty International dated 14 February 2018, Twitter detailed how it is using machine learning to identify and collapse potentially abusive and low-quality replies so that the most relevant conversations are brought forward. It also stated that such low-quality replies will still be accessible to those who seek them out. In the same letter, Twitter stated that they are using machine learning to “bolster their approach to violence and abuse in a number of areas, from better prioritising report signals to identifying efforts to circumvent suspensions” – however, no further details or assessments were given about these specific efforts.

The Reporting Process

Twitter’s policies, for the most part, contain definitions that could be used to address the range of violence and abuse that women experience on the platform. However, in addition to incorporating specific human rights policy commitments, Twitter also has a responsibility to ensure that women who experience violence and abuse on the platform have access to an effective complaints mechanism that they know about, are easily able to use and also trust.

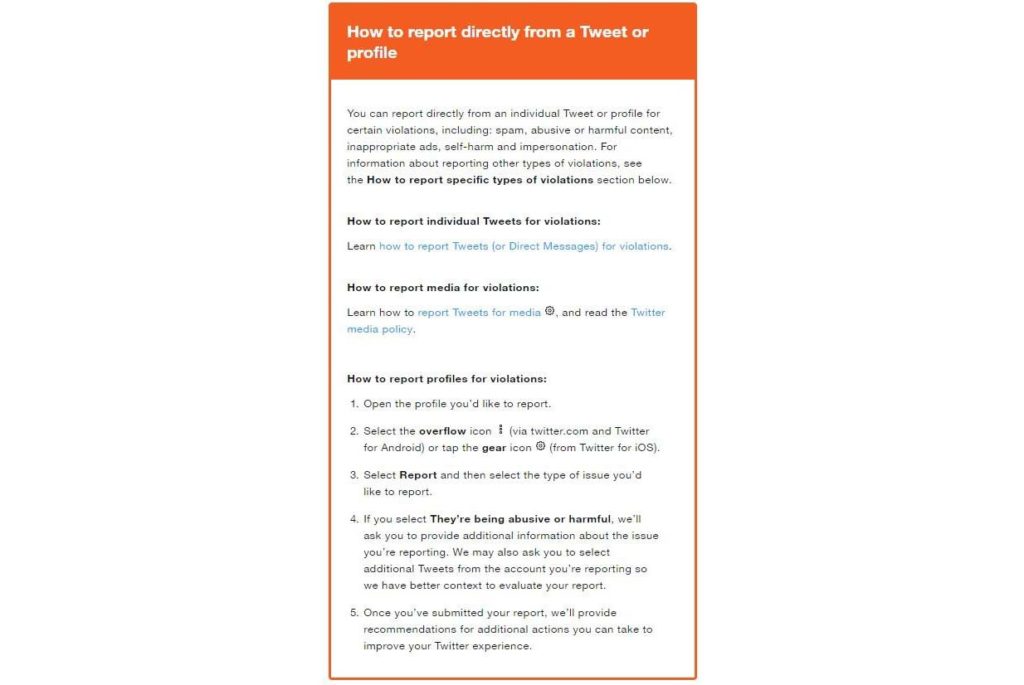

Twitter’s reporting system relies on users to report violence and abuse on the platform. Individuals can report abusive or harmful content directly from a tweet or profile.

How Twitter Interprets and Implements its Rules

Twitter states that it takes a number of factors into account when determining to take enforcement action, such as whether “the behaviour is directed at an individual, group or protected category of people; the report has been filed by the target of the abuse or a bystander; the user has a history of violating our policies; the severity of the violation; the content may be a topic of legitimate public interest.” However, the company does not clarify how these different factors determine which level of enforcement option moderators will apply to specific reports of violence and abuse.

When Twitter determines that a tweet is in violation of the rules, it requires the user to delete the specific tweet before they can tweet again. The user will then need to go through the process of deleting the violating tweet or appealing for review if they believe Twitter made an error. In October 2017, Twitter committed to notify users whose accounts were locked for breaking the Twitter rules against abusive behaviour with details about the offending tweet and an explanation of which policy it violated, via email and in-app. On 9 March 2018, Twitter stated that they will now also email account owners whose Twitter accounts have been suspended both the content of the tweet and which rule was broken to share more context around what led to the suspension. Whilst these improvements are a welcome step in Twitter communicating the types of content it finds to be in breach of its own policies on the platform, the company continues to fail in exercising wider transparency and communicating to its hundreds of millions of Twitter users about the behaviour it finds unacceptable on its platform.

Twitter does not share with users specific examples of content on the platform that would violate the Twitter rules, nor does it provide specific information on how content moderators are trained to interpret the Twitter rules when dealing with reports of abuse. In response to a request from Amnesty International about how content moderators are trained to understand and enforce rules against abusive behaviour on the platform, Twitter stated in its letter of 14 February 2018, “Our review teams are empowered to use their judgement and take appropriate action on accounts that violate our rules.” This lack of clarity creates uncertainty about what Twitter will act on and therefore leads women to place less faith in the reporting system.

Amnesty International also asked Twitter to share details about the content moderation process such as figures of the number of moderators employed per region and details about how content moderators are trained on gender and other identity-based discrimination, as well as international human rights standards more broadly. Twitter declined to share this information and stated in its letter of 14 February 2018, “We are well aware that ensuring our teams are taking into account gender-based nuance in behavior is essential. Every agent handling abuse reports receives in-depth trainings around the nuanced ways historically marginalized groups are harassed, intersectionality, and the impact that abuse online has on people’s everyday lives, across a range of issues.” However, Twitter did not share details about what this training includes, nor did they share the number of moderators employed per region and language.

How Twitter Responds to Reports of Abuse

Twitter does not publicly share any specific data on how it responds to reports of abuse or how it enforces its own policies. Amnesty International requested that Twitter share disaggregated data about the company’s reporting process and response rate on three separate occasions but our requests were refused. Twitter stated in its response of 14 February 2018 that the absolute numbers of reports and the proportion of accounts that are actioned can be both uninformative and potentially misleading. In addition, they stated that users regularly report content with which they disagree or, in some cases, with the direct intent of trying to silence another user’s voice for political reasons. Although context is incredibly important to understand in the reporting process, it does not negate Twitter’s human rights responsibilities to be transparent in how it is dealing with reports of violence and abuse on the platform. The lack of meaningful and disaggregated data leaves little information by which to assess how the reporting process is working for users who report violence and abuse to the platform.

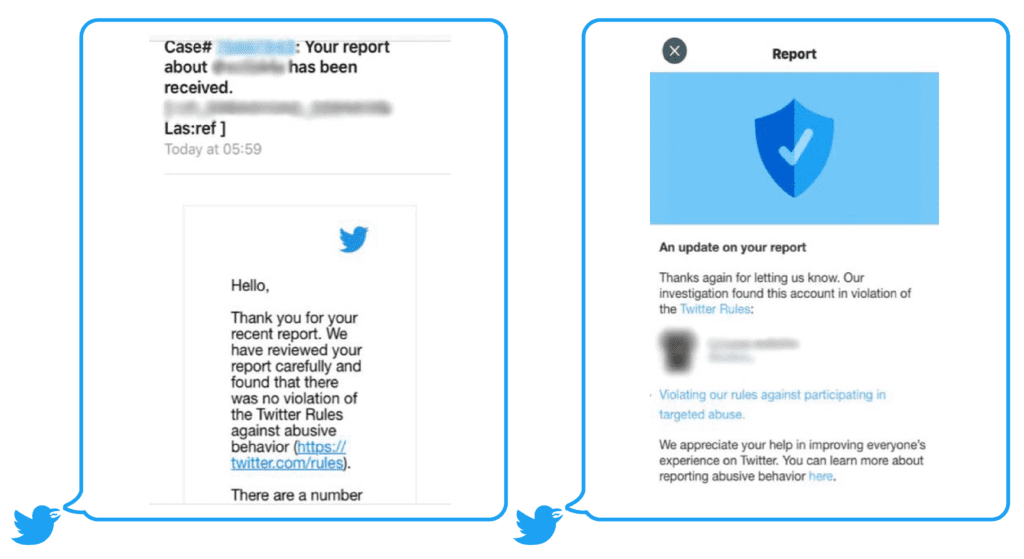

Twitter also states that it can take up to 24 hours for moderators to confirm receipt of a report but it does not explicitly guarantee that it will respond to reports of abuse nor does it stipulate how long it will take to respond to reports. It also does not set any public benchmarks or targets to record or improve response times to reports of abuse. There are currently no features on the platform for users to check on the status of any reports made to Twitter. In January 2018, Twitter introduced its latest reporting feature which provides an in-app notification to users about the progress of any submitted reports and specifies which particular rule an account or tweet is found to have violated. Although such a feature, in principle, provides users with more access to decisions on reports that have been made and greater transparency about which Twitter rule has been broken, this update is only helpful when Twitter makes a decision on reports of violence and abuse.

Twitter also states that users can appeal a decision made following its review of a report of violence and abuse if the user believes that Twitter made an error. A detailed overview of the appeals process, including an explicit commitment to respond to all appeals or a timeframe of when to expect a response, is not included in any of Twitter’s policies. Additionally, although Twitter’s policies state that users can report violence or abuse on the platform that they see happening to users, it does not seem to be investing in awareness-raising campaigns to ensure that users know that they are able to do so.

Amnesty International’s online poll showed that many women feel that Twitter’s response to online abuse has been inadequate. 43% of women polled in the UK and 22% of women polled in the USA, who are Twitter users, stated that the company’s response to abuse or harassment was inadequate. Almost 30% of women across all countries polled, excluding Denmark, who are Twitter users stated the company’s response to abuse or harassment was inadequate. These figures show that Twitter has a lot more work to do when it comes to making its reporting mechanisms more effective for women who experience violence and abuse on the platform.

Women’s experiences: Inconsistent enforcement of Twitter Rules

The Twitter rules are meant to provide guidance and clarity on which behaviours the company deems acceptable on the platform. Although Twitter’s hateful conduct policy covers many forms of abuse that affect women’s rights, it means little for women on Twitter if such policies are not consistently enforced in practice.

UK Politician Lisa Nandy told Amnesty International that she has little faith in Twitter’s reporting process after a tweet she believed was threatening was found not to be in breach of the Twitter rules. She recounted,

“…The next tweet I got was from a guy who said that every time he saw my face it made his knuckles itch, so I thought, ‘Well that’s not right, is it?’. So I pressed Report and straight away I got this automated response saying ‘We will investigate this and get back to you as soon as possible’ and I thought that was brilliant. Then, about two hours later, I got a message saying ‘We’ve looked at this and we don’t consider it violated our guidelines around abuse so no further action will be taken’…. I thought – well this is just a total waste of time then, isn’t it?”

In January 2018, UK journalist Ash Sarkar tweeted a screenshot of abuse that she had received on Twitter which was found not to be in violation of the community standards. The racist and sexist language explicitly used in this tweet demonstrates how, even when reported, abusive content can remain on the platform due to Twitter’s inconsistent interpretation and enforcement of its own rules.

Other women interviewed by Amnesty International shared similar concerns about Twitter’s failure to protect its users online when they report abuse. UK journalist Allison Morris told Amnesty International that she feels Twitter has let her down after experiencing continuous and targeted harassment on Twitter. Twitter’s policy states that “You may not engage in the targeted harassment of someone, or incite other people to do so. We consider abusive behavior an attempt to harass, intimidate, or silence someone else’s voice.”

As a verified Twitter user, Allison has access to additional features when filing a report on Twitter and is allowed to include a short explanation as to why she believes a specific tweet is in breach of Twitter’s community standards. She told us,

“Twitter, I find, don’t remove anything. I think I’ve maybe managed, out of reporting probably over 100 posts to Twitter, I think they’ve removed two – one was a threat and the other had a pornographic image. There are 50 or 60 posts where I have specifically explained the tweets were malicious and the person that I believe is sending them has already been criminally convicted [for harassment] but they won’t remove any of the tweets.

Twitter says they will remove any accounts which are specifically set up to target a person. But I’ve had accounts set up where a person has only posted three times, all three posts were directed at me and were malicious and had comments about my children and my father who is dying. When I reported to Twitter, they said it didn’t breach community standards.”

In January 2018, Allison reported yet another account which she believed was in violation of Twitter’s rules on ‘targeted harassment’. Twitter initially found that the account was not in breach of their community standards on ‘targeted harassment’. However, after Amnesty International contacted Twitter later that month about the specific account to clarify how the rules on ‘targeted harassment’ are interpreted, the account was found to be in breach of Twitter’s rules upon further investigation by the company.

Report of same abusive tweet found to be in violation of Twitter’s rules following contact by Amnesty International (right)

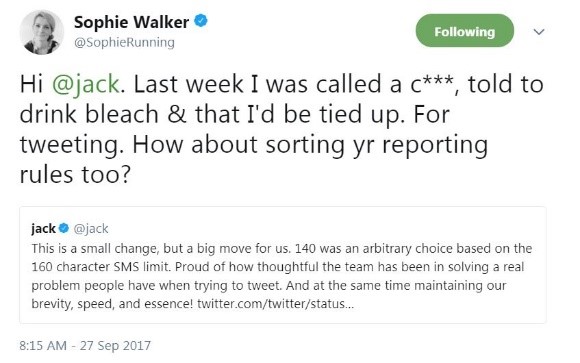

UK Politician Sophie Walker also spoke about her disappointment in Twitter failing to take violence and abuse against her seriously. She told Amnesty International,

“I have had tweets saying ‘We are going to tie you up, we are going to make you drink bleach, you will be sorry when you are in a burkha’. I have also had someone incite others to rape me, with the words: ‘Who wouldn’t rape Sophie Walker?’ When I reported these to Twitter, the response came back so quickly it was almost like an ‘out of office’ reply. It was so fast that it felt automated, rather than considered. They said: ‘We’ve investigated and there is nothing to see here.’ I’ve tried escalating the reports, but the reply comes back just as fast and just the same. In some respects, Twitter’s response to abuse is more hurtful than the abuse. It feels like they are saying: ‘You’re on your own if you participate in this forum.’”

UK writer and activist Laura Bates also spoke about the discrepancies between Twitter’s policies and practices and how reporting abuse directly to more senior Twitter staff was the only way she received results. She explains,

“Social media companies aren’t stepping up and being held accountable for protecting the safety of their users. They talk a good game when something comes up in the press, but they’re not taking that action. In my experience of reporting accounts to Twitter, there’s a safety gap in terms of how their terms and conditions — which are quite vaguely worded — are interpreted. When I reported things to Twitter, it very rarely resulted in anybody being suspended. But when I was put in touch with someone who was higher up in the company, they took action and removed the harassment.

I think that’s significant because it shows that there’s a real gap between the small number of people who are engaged with these tech companies and everyday users. And it’s really problematic that social media companies are only taking action when they’re under pressure, because that amplifies already privileged voices and continues to push marginalized voices off these platforms.”

Sana Saleem, a Pakistani journalist who has been living in the USA for the last two years, also spoke about the lack of consistent enforcement she has witnessed after reporting abuse on the platform. She explained,

“It’s really weird. When I was working in Pakistan – and reporting accounts as abusive – they were being taken down and suspended. Here in the US, hardly ever has an account been suspended. I receive a lot of hate from Neo Nazis and white supremacists and Twitter hasn’t taken it down.”

UK journalist Hadley Freeman also spoke about the inconsistency of Twitter’s reporting system. She told us,

“Sometimes I report abuse to Twitter but most of the time it does nothing. Twitter is really unpredictable about what it considers abuse. I could report a virulently abusive message and they say it doesn’t violate their guidelines. And then I’ll report something relatively minor and they’ll take action.”

UK Politician Naomi Long also spoke about Twitter’s failure to take reports of abuse against her seriously. She told us,

“In respect of reporting abuse on Twitter I think it’s a wholly ineffective process – I have to be honest. I have reported abuse and unless it is very specific, they don’t take it seriously.”

The inconsistent enforcement of its own policies reinforces the need for Twitter to be far more transparent about how it interprets and enforces its own community standards. It is also important to note that an increased level of transparency from Twitter about the reporting process benefits all users on the platform. One way to ensure that Twitter is a less toxic place for women is to clearly communicate and reinforce to users which behaviours are not tolerated on the platform and to consistently apply its own rules.

Women’s Experiences: Twitter’s Inaction on Reports of Abuse

Many women who spoke to Amnesty International told us that when they report abuse on Twitter it is often met with inaction and silence from the platform. These experiences reflect a similar trend found in a 2017 study by the Fawcett Society and Reclaim the Internet. The study reviewed a range of abusive content on Twitter which was against Twitter’s policies and then reported to the platform by anonymous accounts. One week after the tweets were reported, the posts remained on Twitter, the accounts which reported the abuse received no further communication from the platform and no action had been taken on any of the accounts that were reported.

Multiple women interviewed by Amnesty International echoed these findings. US journalist and writer Jessica Valenti said,

“I have not gotten a lot of movement on anything unless it is a very direct, obvious threat. That’s part of the problem. When someone says ‘Someone should shoot Jessica in the head’, that’s very obvious. But harassment can be savvy and they know what they can say that’s not going to get them kicked off a site or not illegal so I don’t even bother with stuff like that…But I’ve reported tons of stuff and nothing happens”.

Scottish Parliamentarian and Leader of the Opposition Ruth Davidson shared a similar experience. She explained,

“Have I bothered to report? I think in the beginning I did and not much happened, so I don’t know, maybe it isn’t the best example to set. I think that if we do want platforms to act in a more robust manner then we’ve got to keep up the level of complaint”.

US reproductive rights activist and blogger Erin Matson also told Amnesty International how she never heard back from Twitter after reporting abuse to the platform. She told us,

“Most of the harassment I received is on Twitter. It’s very fast paced and people send you horrible images. One time I tweeted that white people need to be accountable towards racism and I started getting images of death camps….I’ve reported people to Twitter but I never heard back”.

In 2015, US organization Women, Action, Media! (WAM!) was temporarily elevated to ‘Trusted Reporter Status’ by Twitter. Being a ‘Trusted Reporter’ allowed WAM! to assess and escalate reports of abuse (as necessary) to Twitter for special attention. US writer and activist Jaclyn Friedman, who was also the Executive Director of the organization during this time, explained that many of the women who reported abuse through WAM! said they had previously made reports of abuse to Twitter but never heard back from the platform. Jaclyn explained,

“…The thing that stays with me…is just the number of people we heard from who said ‘I reported this over and over and over and got no response’ – and that we were able to get a response. That gap, I think, is really instructive.”

Jaclyn also shared multiple examples of abusive tweets that she has reported to Twitter, but which remain on the platform.

Rachel*, a young woman in the UK without a large Twitter following, told us about her experience of reporting abuse.

“I reported the abusive tweets and accounts. From that, one account got suspended. Everything else I reported to Twitter, they said, we are taking your complaint seriously and then nothing else was done. There was no further contact from Twitter. There was nothing.

It almost feels that when you are filling in the reporting questions that they don’t believe you. It’s like a recurring theme with women – people don’t instantly believe you.”

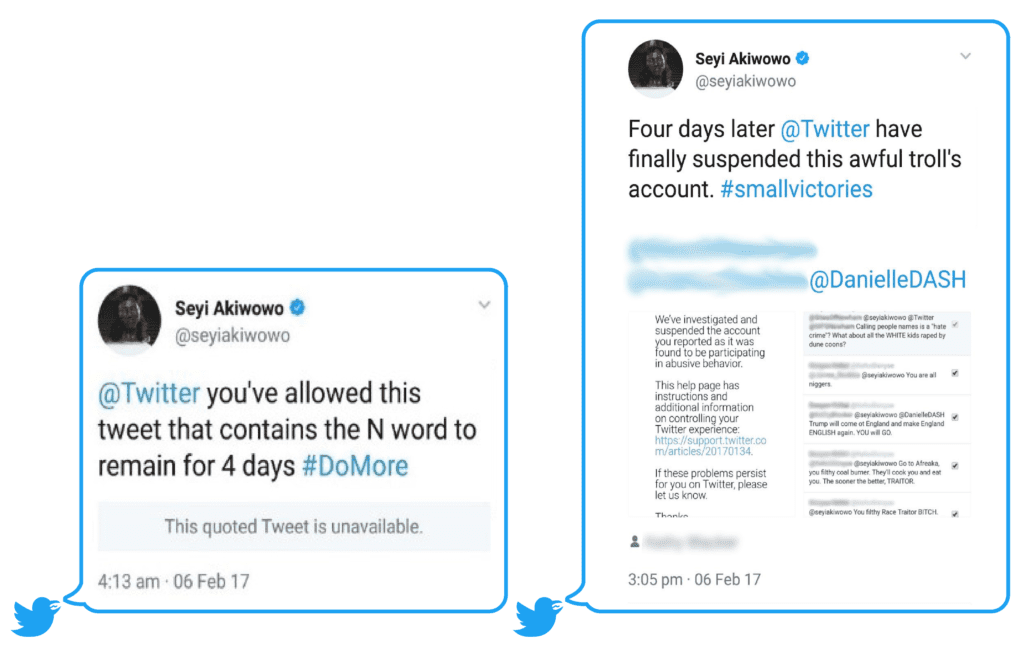

UK politician and activist Seyi Akiwowo explained to Amnesty International how she also felt let down by Twitter when they initially failed to take any action on the multiple reports she made after receiving a torrent of racist and sexist abuse in February 2017. She recounted,

“I reported around 75 comments on both YouTube and Twitter and did not receive feedback or acknowledgment. It took around two hours to go through the comments and it was really upsetting.

Twitter never actually contacted me when I reported it – they only started suspending those accounts and deleting those tweets after I appeared on the ITV’s London Tonight and did media interviews with BuzzFeed and the BBC. So if I didn’t go into fighter mode and make media appearances and gain public support I very much doubt there would be any action from Twitter.

[I was really frustrated with] their lack of response and that is what led me to start Glitch! UK. At the time, I felt like Twitter were leaving these tweets there for me to be abused and there was no one to help me. I felt kind of betrayed. I feel silly about saying that – it’s obviously just a company, they don’t know me [or] owe me anything…but, actually, they do. For it to turn like that and to be abandoned by Twitter at my time of need, my hour of need, it was just really sad. I was more frustrated at feeling let down by Twitter than these idiots that don’t know me calling me the N-word.”

Women Stop Reporting Abuse

One of the most pernicious impacts of the combination of inaction and inconsistency in responding to reports of abuse is the detrimental effect this has on women reporting experiences of abuse in the future. Women who are the targets of abuse bear the burden of reporting it. This not only takes time, but also takes an emotional toll on women. When women have had – or hear of people who have had – negative experiences reporting abuse to Twitter, they are often less likely to feel it is worth the effort to undertake the work of reporting abuse.

US abortion rights activist Renee Bracey Sherman explained,

“I am way over Twitter. If I am dealing with a lot of hate, I can’t bear to be in there, so I don’t even report it.”

US activist Pamela Merritt stressed how exhausting the reporting process can be after experiencing abuse on the platform. She said,

“When possible, I report it. By possible, I mean if I have the time and the emotional bandwidth to be disappointed about the report. In my experience, they rarely take action. I have only ever had them take one report seriously.”

US activist Shireen Mitchell told us,

“I don’t bother reporting anymore for myself because it doesn’t matter. All the solutions they came up with are ridiculous. The problem is they do not have enough diverse staff – enough to understand what the threats look like for different groups. They think these are jokes and they have allowed this to be part of the discourse.”

When Twitter’s reporting processes fail women who report abuse, others may be dis-incentivized from attempting to use the processes. UK journalist Siobhan Fenton told us,

“I wrote an article about transgender rights a couple of years ago and someone on Twitter sent me an image of a transgender woman who had been raped and killed and said that the same thing should happen to me. I think I mentioned it to a few friends at the time, just for support, but it didn’t occur to me to report it to Twitter itself because I know that there are journalists who have experienced online abuse and whenever they’ve reported things to Twitter they almost invariably don’t get a positive response and the material tends to stay up.”

Twitter has a responsibility to ensure that any potential human rights abuses – such as the silencing or censoring of women’s expression on their platform – are addressed through prevention or mitigation strategies, and the reporting process is a key factor in this. However, in order for the reporting process to work effectively, Twitter must be much more transparent in how it interprets its own rules. It is also important to note that most Twitter users do not have verified accounts, and as a result are unable to provide any additional context or a written explanation when they report a tweet or account as abusive.

Given the complexities of assessing abuse in different regions and the importance of context when determining whether certain content is abusive, providing a rigid definition of what constitutes online abuse is not a simple task. However, although there are ‘grey areas’ around the parameters of online abuse, this should not be an excuse for Twitter’s inaction and inconsistency in dealing with reports of abuse. It would be much easier to tackle the ‘grey areas’ of abuse if Twitter were, first and foremost, clear about the specific forms of violence and abuse that it will not tolerate.

A paper by the Association for Progressive Communications on Due Diligence and Accountability for Online Violence against Women recommends that companies should create appropriate record keeping systems specific to violence against women and classify and share the ways in which they have responded to it. Ultimately, Twitter’s lack of transparency about how it interprets and enforces its own rules undermines the importance of human rights that are implied in its policy commitments.

Twitter’s Human Rights Failures

In its response to Amnesty International dated 15 March 2018, Twitter highlighted several positive changes to their policies and practices in response to violence and abuse on the platform over the past 16 months, including a tenfold increase in the number of abusive accounts against which action has been taken.

Amnesty International’s findings indicate that Twitter’s inconsistent enforcement and application of the rules as well as delays or inaction to reports of abuse when users breach the Twitter rules mean that the company’s response is still insufficient.

Together with the lack of specific human rights policy commitments and seemingly ineffective reporting mechanisms, this clearly demonstrates a failure of the company to adequately meet its corporate responsibility to respect human rights in this area.

Based on Amnesty International’s research and publicly available materials, and given Twitter’s refusal on three occasions to disclose comprehensive data on the reporting process, Amnesty International’s conclusion is that these failures result in Twitter contributing to the harms associated with women’s experiences of violence and abuse on the platform.

It is worrying that a social media platform of Twitter’s importance does not appear to have adequate human rights compliant policies and processes in place to tackle this problem adequately, efficiently and transparently. If Twitter had disclosed the requested information about reports of abuse, this may have revealed additional insight that would have impacted our conclusion.